Introduction

JavaScript (which is defined by the ECMAScript specification) is a just-in-time-compiled, memory-managed (garbage collected), dynamically-typed, functional programming language. Its greatest practical strength is its use on the web as the primary client-side programming language. This means that nearly every computing device with a display serves as both a target platform and a potential development environment. That is powerful and convenient.

The language is relatively elegant, but has an undeserved reputation for being confusing or awkward. This is perhaps due to the large amount of poorly-designed code available on the web and general misunderstandings about how the language works. As with every language that I know, the specification has some poor design decisions of its own, but these are mostly avoidable and are patched over by many implementations already (e.g., see strict mode, const, let).

Learning any programming language means learning:

- the syntax and semantics

- the style of effective programs, with emphasis on idioms

- specific implementations (particularly, performance characteristics)

- the standard library

- common third-party libraries

A wealth of both good factual information and diverse opinions on the final three topics for JavaScript is currently available on the Internet (e.g., MDN, caniuse.com, ecmascript, jquery.com, stackexchange.com). So, in this document I focus on the first two topics because I have found that existing resources address them less well.

Anyone who already knows how to program well in some other language (i.e., a "sophisticated" programmer) should be able to program in JavaScript immediately after reading this document, and should be writing effective and elegant programs after about a week of experience. Also, those programmers accustomed to Python's list comprehensions or with experience in functional languages such as Scheme, ML, and Haskell may find JavaScript immediately familiar. To address the non-programmer audience, I am writing a short book on JavaScript as a first language, but unlike that book, this document is intentionally not for first-time programmers.

In addition to this article, I recommend Yotam Gingold's example-based overview (which I use as a quick reference guide), the fantastic resource of stackoverflow.com, the reasonable resource of JavaScript: The Good Parts, the verbose-but-correct MDN, and the somewhat-questionable but conveniently terse resource of w3schools.com.

I provide two additional resources for both the novice and the experienced JavaScript programmer. The codeheart.js framework is a small library that provides functions for core data structure routines and argument checking, and that smooths over implementation differences between browsers. I use it when teaching introductory programming and in my own work, even for commercial video games. The codeheart.js website includes many examples of both simple JavaScript programs and those that do some tricky things, such as access gamepads and the camera or perform complex algorithms.

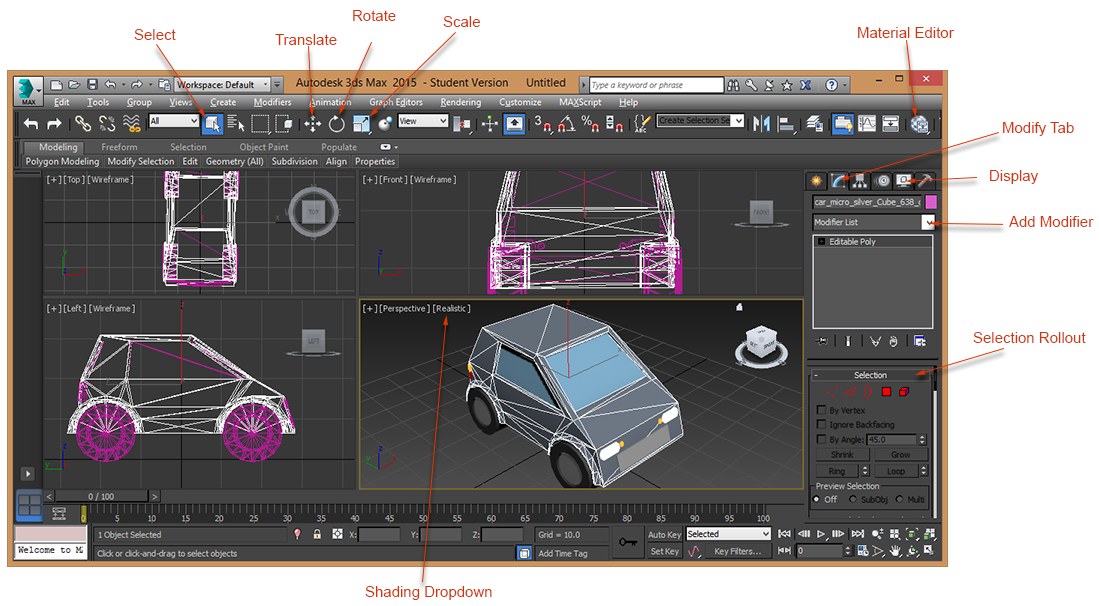

The Graphics Codex is a web and iOS reference app for graphics programming. It contains JavaScript, HTML, CSS, Unicode, and OpenGL information relevant to the JavaScript (graphics) programmer.

The most popular JavaScript interpreters are presumably those in web browsers. Be aware that there are other implementations available. The two I'm familiar with are node.js, which was designed for server-side programming of client-server applications, and Rhino, which was designed for embedding JavaScript within applications written in other languages.

Some common reasons for learning JavaScript are:

- Abstracting repetitive structure in web pages

- Adding interactive features (such as form error checking) to web pages

- Implementing full applications that run within a web browser, including real-time games

- Implementing WebGL programs, which are largely written in a language called GLSL but require JavaScript to launch the GLSL threads


To accomplish the tasks implied by those reasons, one obviously has to learn about the library conventions for accessing the HTML document tree data structure (called the "DOM") or the WebGL library. For the DOM, I recommend reading the formal specification and the more colloquial quirksmode and w3schools descriptions. The WebGL specification is understandable (although not particularly pleasant reading) for those already familiar with OpenGL. For those new to GL, I recommend Mozilla's documentation.

Three Simple Examples

JavaScript begins executing at top level and declarations can be mixed with execution; there is no "main" function. The HTML script tag embeds JavaScript in a web page for evaluation by a browser:

<script src="file.js"></script>

<script>

// Inline javascript here

</script>

Browser Output

To run this example in a web browser, create two files. The first is index.html with contents:

<html>

<body>

<div id="output"> Some output: <br/> </div>

<script src="example.js"></script>

</body>

</html>

The second file is example.js, with contents:

alert("hi"); // Pop up a modal dialog

var i;

for (i = 0; i < 10; i += 2) {

console.log(i); // Print to the developer console

}

// Emit markup into the HTML document

document.write("<b>Hello world</b>");

// Assume that a tag of the form <div id="output"></div> already appeared

// in the HTML

document.getElementById("output").innerHTML += "<i>More</i> information";

Now, load index.html in your web browser and open the JavaScript Console (a.k.a. Developer Console, Error Console, and so on in different browsers) to see the result of the console.log statements. This console (a.k.a. "interaction window", "read-eval-print-loop", REPL) is useful for debugging and experimentation. You can enter code into it for immediate execution. That code can reference any global variables, as well as declare new variables. This allows inspection of any exposed state (console.dir even prints values in an interactive tree view!) as well as experimentation with functionality before committing it to the main program. Many browsers also have built-in debuggers and profilers, but this immediate interaction with your code is often the best place to start investigations. Browser consoles that have command completion also allow discovery of new features during experimentation.

Insertion Sort

function insertionSort(a) {

var i, j, temp;

for (j = 1; j < a.length; ++j) {

// Insert a[j] into the sorted prefix a[0..j-1] by moving it left

// until the element before it is no larger

for (i = j; (i > 0) && (a[i - 1] > a[i]); --i) {

// Swap

temp = a[i - 1]; a[i - 1] = a[i]; a[i] = temp;

}

}

}

Derivatives

function derivative(f) {

var h = 0.001;

return function(x) { return (f(x + h) - f(x - h)) / (2 * h); };

}

console.log(" cos(2) = " + Math.cos(2));

console.log("-sin(2) = " + (-Math.sin(2)));

console.log("(d cos/dx)(2) = " + derivative(Math.cos)(2));

function f(x) { return Math.pow(x, 3) + 2 * x + 1; };

var x = 12.5;

console.log("[6x | x = 12.5] = " + (6 * x));

console.log("(d^2 (x^3 + 2x + 1)/dx^2)(12.5) = " +

derivative(derivative(f))(x));

Types

JavaScript is a dynamically-typed language, so values have types but variables may be bound to any type of value. The value domain of JavaScript is:

- Immutable unicode-capable string (a sequence of 16-bit UTF-16 code units, with escape sequences; string length counts code units rather than characters, so it may not be what you expect)

- Boolean

- 64-bit floating point (a.k.a. "double precision") number

- First-class, anonymous function

- Apparently expected, amortized O(1)-time access table (a.k.a. "dictionary", "map", "hash map", "hash table") mapping strings to values, called object. Non-string keys are coerced silently.

- A null value

- An undefined value, which is returned from an object when fetching an unmapped key

Those seven types define the semantics of JavaScript values. Several other types are provided for efficiency within implementations. Except for some possibly-accidental exposure of limitations on those, they act as optimized instances of the above types. These optimized types are:

- Array of values. This is semantically just an object that happens to have keys that are strings containing integers, however when allocated as an array and used with exclusively integer keys the performance is what one would expect from a true dynamic array (a.k.a. "vector", "buffer").

- Native functions, provided by the interpreter.

- Typed numeric arrays: Uint8Array, Int8Array, Uint8ClampedArray, Uint16Array, Int16Array, Uint32Array, Int32Array, Float32Array, and Float64Array. These are objects that use efficient storage for numeric types and necessarily support only limited get and set functionality. Functions that operate on normal arrays do not work with these types.
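Several of the properties listed above can be checked directly in a console. The following is a minimal sketch using only the standard typeof operator and a typed array; all of the variable names are illustrative and do not come from any library.

```javascript
// typeof reports the type of a value, not of the variable holding it
var kinds = [typeof "hi", typeof true, typeof 3.14,
             typeof function() {}, typeof {}, typeof undefined];

// Historical quirk: typeof null is "object", so test for null with ===
var nullKind = typeof null;

// Object keys are strings; the number 1 is silently coerced to "1"
var table = {};
table[1] = "one";
var sameSlot = table["1"];

// An array is an object whose keys happen to look like integers
var list = [10, 20, 30];
var viaStringKey = list["1"];
var listKind = typeof list;

// String length counts 16-bit code units, so a single character
// outside the basic plane (here U+1F600) has length 2
var emojiLength = "\uD83D\uDE00".length;

// Typed arrays store only numbers of one fixed size
var bytes = new Uint8Array(2);
bytes[0] = 300;   // Stored modulo 256
bytes[1] = "7";   // Coerced to the number 7
```

Because typeof cannot distinguish arrays or null from other objects, Array.isArray(list) and an explicit comparison against null are the usual tests for those two cases.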

As implied by its name, the "object" type is frequently employed as a structure holding fields that are accessed by name. JavaScript has some constructs that support object-oriented programming based around these structures. There are no classes or inheritance in the Java or C++ style, however functions and objects can be used to emulate much of that style of programming, if desired. I discuss some of the idioms for this later in this document.

Syntax

JavaScript syntax is largely similar to that of C. It is case-sensitive, whitespace is insignificant (except in return statements), and braces are used for grouping. Semicolons are technically statement separators instead of statement terminators and are optional in many cases. Like many people, I happen to use them as if they were terminators to avoid certain kinds of errors and out of C-like language habits.

I find it easier to learn syntax from concrete examples than formal grammars. A slightly-idiomatic set of examples (e.g., braces aren't required around single-expressions except in function declaration) of core JavaScript syntax follows. I recommend reading the actual grammar from the specification after becoming familiar with the syntax.

Expressions

3 // number

3.14 // number

"hello" // string

'hello' // Single and double-quoted strings are equivalent

true // boolean

function(...) { ... } // Anonymous function literal

{"x": 1, "name": "sam"} // Object literal

{x: 1, name: "sam"} // Object literal with implicit quotation of keys

[1, 2, "hi"] // Array object literal

null // Null literal

These can be nested:

{material:

{color: [0.1, 1, 0.5],

tile : true},

values: ["hello", function(x) { return x * 2; }, true, ['a', 'b']]

}

Technically, the following are protected global variables and not literals:

Infinity

NaN

undefined

That technicality matters because the serialization API,

JSON, provides no way to serialize undefined or floating-point special values (it also provides no way to serialize function values, although that is less surprising).
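To make that limitation concrete, here is a small sketch of what the standard JSON.stringify and JSON.parse functions do with those values. The record object and its keys are made up for illustration.

```javascript
var record = {
    name: "sam",
    missing: undefined,
    ratio: NaN,
    limit: Infinity,
    callback: function() { return 1; }
};

// Keys mapped to undefined or to a function are dropped entirely;
// NaN and Infinity are written as null
var text = JSON.stringify(record);

// Inside an array, undefined is written as null to preserve indices
var arrayText = JSON.stringify([1, undefined, 3]);

// The special values therefore do not survive a round trip
var restored = JSON.parse(text);
```

After the round trip, restored.ratio is null rather than NaN and restored has no "missing" key at all, so code that needs these values must encode them explicitly (for example, as strings).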

JavaScript provides common C-like operators:

a + 3

a + "hello" // String concatenation

a - b

a * b

a / b // Always real-number division, never integer division. Use Math.floor if you want an integer...

a++

++a

a % b // Floating-point modulo. Use Math.floor first if you want integer modulo

a -= b

a += 3

a += "hello" // Shorthand for a = a + "hello"; does not mutate the original string

// etc.

a >> 1 // Bit shift right; forces rounding to 32-bit int first

a << 1 // Bit shift left; forces rounding to 32-bit int first

a > b

a >= b

! a

a != b

a == b

a !== b

a === b

a || b

a && b

// etc.

a ? b : c // Functional conditional
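A few of the operators above behave differently from their C counterparts. The sketch below evaluates some representative cases; the variable names are illustrative only.

```javascript
// Division is always floating point; floor it for an integer quotient
var quotient = 7 / 2;                  // 3.5
var intQuotient = Math.floor(7 / 2);   // 3

// Modulo accepts non-integers and takes the sign of the left operand
var fractionalMod = 7 % 2.5;           // 2
var negativeMod = -7 % 3;              // -1

// Shift operators convert their operands to 32-bit integers first
var truncated = 5.7 >> 0;              // 5
var doubled = 5 << 1;                  // 10
var halved = 5 >> 1;                   // 2
var wrapped = 1 << 31;                 // -2147483648 (sign bit set)

// + concatenates as soon as either operand is a string,
// and is evaluated left to right
var mixed = 1 + 2 + "3";               // "33"
```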

As is the case in most languages, the logical "and" (&&) and "or" (||) operators evaluate only the left operand if it determines the value of the expression. Undefined, null, false, zero, NaN, and the empty string all act as false values in logical expressions.

The empty object (or empty array, which is an object) acts as a true value in logical expressions. Logical "and" and "or" return one of the operands, as is common in dynamically-typed languages. For example, the expression 32 || false evaluates to 32.
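The operand-returning behavior is what makes the common "default value" and "guarded access" idioms work. A minimal sketch follows; firstTruthy and settings are hypothetical names chosen for the example.

```javascript
// || returns the left operand if it is truthy, otherwise the right one
function firstTruthy(value, fallback) {
    return value || fallback;
}

var number = 32 || false;                        // 32
var defaulted = firstTruthy(undefined, "none");  // "none"

// Pitfall: 0 and "" are false values, so they are also replaced
var zeroReplaced = firstTruthy(0, 10);           // 10

// && returns the left operand if it is falsy, which guards the
// property access on the right from running on null
var settings = null;
var theme = settings && settings.theme;          // null, no exception

// Empty objects and arrays act as true values
var emptyObjectIsTrue = !!{};
var emptyArrayIsTrue = !![];

// Every false value listed above is removed by a Boolean filter
var falseValues = [undefined, null, false, 0, NaN, ""].filter(Boolean);
```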

There are two versions of the equality operator. Triple-equals (===) compares values in a similar way to the C language, and is almost always the operator desired for an equality comparison. Double-equals (==) is equality comparison with coercion, so the expression 3 == "3" evaluates to true. The not-equals operator has the same two variants (!= and !==).
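The difference between the two operators is easiest to see side by side. This is a short sketch of standard behavior with illustrative variable names.

```javascript
var looseNumberString = (3 == "3");              // true: "3" is coerced
var strictNumberString = (3 === "3");            // false: types differ

var looseNullUndefined = (null == undefined);    // true
var strictNullUndefined = (null === undefined);  // false

var looseZeroEmpty = (0 == "");                  // true: "" coerces to 0

var looseNotEqual = (3 != "3");                  // false
var strictNotEqual = (3 !== "3");                // true

// NaN is not equal to anything, including itself; test with isNaN
var nanEqualsItself = (NaN === NaN);             // false

// Objects (including arrays) compare by identity, not by contents
var sameContents = ([1, 2] === [1, 2]);          // false
```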

Exponentiation and other common math functions are mapped by the global object named Math:

Math.pow(b, x) // b raised to the power of x

Math.sqrt(3)

Math.floor(11 / 2)

// etc.

Parentheses invoke functions:

y(...) // Invoke a function

(function(...) {...})(...) // Invoke an anonymous function at creation time

Note that extra parentheses are required around an anonymous function that is immediately invoked, due to an ambiguity in the syntax of the combined function declaration and assignment. As Jeremy Starcher points out in the comments below (thanks!), any operator is sufficient to force invocation of the function, but parentheses are probably the most convenient and clear.

Function invocation syntax leaves another apparent ambiguity: is the above expr expr or a function applied to its arguments? The language is defined to have the above invoke the function and there is no way to express two adjacent expressions, one of which is a function literal and both of which have ignored values.
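The following sketch shows the immediate-invocation forms discussed above. The variable names are illustrative.

```javascript
// Parenthesized function literal, invoked immediately with argument 21
var doubled = (function(x) { return x * 2; })(21);

// On the right side of = the literal is already in expression position,
// so here the extra parentheses are optional
var tripled = function(x) { return x * 3; }(14);

// At the start of a statement, any operator in front of the literal
// also forces it to be parsed as an expression
var ran = false;
!function() { ran = true; }();
```

Without the parentheses or a leading operator, a statement that begins with the function keyword is parsed as a declaration, and the trailing () is then a syntax error.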

Extracting a key from an object is accomplished with:

a[b] // Get a reference to the value mapped to key b from a

a.hello // Shorthand for a["hello"]

Those are also legal left-hand values for assignment operators:

student["fred"] = { name: "fred", height: 2.1};

body.leftarm.hand = true;

sphere.render = function(screen) { drawCircle(screen, 100, 100, 3); }
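As a small illustration of the two lookup syntaxes and of what happens for unmapped keys, consider this sketch. The student table and its keys are hypothetical example data.

```javascript
var student = {};
var key = "fred";

// Bracket syntax accepts any expression as the key
student[key] = {name: "fred", height: 2.1};

// Dot syntax is shorthand for a string-literal key
var sameObject = (student.fred === student["fred"]);

// Fetching an unmapped key yields undefined rather than an error...
var missing = student.wilma;

// ...but fetching a key from undefined itself throws a TypeError
var threw = false;
try {
    student.wilma.height;
} catch (e) {
    threw = (e instanceof TypeError);
}

// Assigning to a new key creates it; delete removes a key
student.wilma = {name: "wilma", height: 1.7};
delete student.fred;
var remaining = Object.keys(student);
```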

Statements

// Single line comment

/* multi-line comment */

var x; // Declare a variable in the current scope

var x = 3; // Declare and assign

if (...) { ... } // Conditional

if (...) { ... } else { ... } // Conditional

switch (...) { case "a": ... break; ...} // Conditional

while (...) { ... } // Loop

for (...; ...; ...) { ... } // Loop

return 3; // Return from a function

x = true; // Assignment (an operator--any expression can be made into a statement by a semicolon)

throw ...; // Throw an exception

try { ... } catch (e) { ... } // Catch an exception

function foo(...) {...} // Shorthand for var foo = function(...) {...};

Within a loop, continue and break skip to the next iteration and terminate the loop, respectively. As previously mentioned, the return statement is sensitive to whitespace. A newline after a return ends the statement, so the returned expression must begin on the same line as the return itself to be included.
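The return pitfall is easy to reproduce. In this sketch, broken and fixed are illustrative function names.

```javascript
function broken() {
    // The newline ends the statement, so this is "return;" followed
    // by an expression statement that is never reached
    return
        42;
}

function fixed() {
    // Begin the expression on the same line as return; an opening
    // parenthesis lets it continue across several lines
    return (
        42
    );
}

var brokenResult = broken();
var fixedResult = fixed();
```

Here brokenResult is undefined and fixedResult is 42.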

Functions are the only mechanism for creating scope in JavaScript. Specifically, braces {} do not create a new scope the way that they do in C++. This means that the variable defined by a var statement exists within the entire scope of the containing function. I declare all variables at the top of a function (like a C programmer) to avoid accidentally creating the same variable inside two different sets of braces.
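A short sketch of function-level scope for var follows. The names scopeDemo, inner, and secret are made up for the example.

```javascript
function scopeDemo() {
    var log = [];

    // The declaration of inner belongs to the whole function, so the
    // name already exists here, bound to undefined
    log.push(typeof inner);

    if (true) {
        var inner = "set inside braces";
    }

    // Still visible after the closing brace
    log.push(inner);

    // Wrapping code in a function is the way to get a private scope
    (function() {
        var secret = "private";
        log.push(secret);
    })();

    // secret does not exist out here
    log.push(typeof secret);

    return log;
}

var scopeLog = scopeDemo();
```

The resulting scopeLog is ["undefined", "set inside braces", "private", "undefined"].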

Furthermore, writing to a variable that has not been defined automatically declares it in global scope, which can turn an accidental missing declaration into a serious program error. Fortunately, most browsers support an opt-in "strict mode", in which this is an error.
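Strict mode is enabled by the string literal "use strict" at the top of a script or of a function body. The sketch below, with the hypothetical names assignUndeclared and undeclaredCounter, shows the missing-declaration mistake being reported.

```javascript
function assignUndeclared() {
    "use strict";
    // There is no "var undeclaredCounter" anywhere, so in strict mode
    // this assignment throws instead of creating a global variable
    undeclaredCounter = 1;
}

var errorName = null;
try {
    assignUndeclared();
} catch (e) {
    errorName = e.name;
}

// No global variable was created by the failed assignment
var leaked = (typeof undeclaredCounter !== "undefined");
```

Here errorName is "ReferenceError" and leaked is false. If the directive were removed from a non-strict script, the same assignment would silently create a global named undeclaredCounter.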

JavaScript identifiers are a superset of those from the C language because they allow dollar sign ($) and unicode characters to be treated as letter characters. Beware that the popular jQuery and Underscore.js libraries by default bind $ and _, respectively, as their most frequently used variable. So, you may encounter code like $("p").hide(), in which one might expect that the dollar sign is an operator, but it is in fact simply a function that happens to act as half of a particular kind of tree iterator useful for document manipulation.

Beware that, while there are few reserved words, some variables (and accessors that use variable syntax) are immutably bound in the global namespace (the "window" object) by the web browser. The one that often catches game programmers off guard is "location".

The following code causes the browser to redirect to a page ("spawn point") that does not exist, rather than reporting an error that the variable has been declared twice or doing what the programmer probably expected of simply storing a string:

var location = "spawn point";

Function calls eagerly evaluate all arguments, which are then passed by value. All arguments are optional and those lacking actual parameters are bound to undefined. To support arbitrary numbers of arguments, an array-like collection of all arguments called arguments is bound within a function call. To return a value, use the return statement. To return multiple values from a function, either return an object (e.g., an array), or pass in an object or array whose contents can be mutated.
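These calling conventions can be sketched in a few lines. All of the function names below (greet, sum, minMax, reset) are illustrative.

```javascript
// Missing arguments are bound to undefined, which permits defaults
function greet(name, greeting) {
    if (greeting === undefined) { greeting = "hello"; }
    return greeting + ", " + name;
}

// The array-like arguments collection supports any number of arguments
function sum() {
    var total = 0, i;
    for (i = 0; i < arguments.length; ++i) { total += arguments[i]; }
    return total;
}

// Return several values by packing them into one object
function minMax(values) {
    return {min: Math.min.apply(null, values),
            max: Math.max.apply(null, values)};
}

// The value that is passed for an object is a reference to it:
// mutation is visible to the caller, rebinding the parameter is not
function reset(v) {
    v.x = 0;
    v = {x: 99};
}

var point = {x: 5};
reset(point);
var range = minMax([3, 1, 2]);
```

After these calls, point.x is 0, range.min is 1, and range.max is 3.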

OOP with this and new

JavaScript has two special expressions that provide a limited form of object-oriented behavior: this and new. You can write an awful lot of useful JavaScript without reading this section, and are more likely to write understandable and debuggable code if you don't use the features described here.

JavaScript's design is really that of a functional programming language, not an object-oriented language, and thinking in terms of functions instead of objects will usually lead to more elegant code. ("Objects are a poor man's closure.")

The expression this evaluates to an object and cannot itself be explicitly assigned--it is not an identifier for a variable. In a function invoked by the syntax fcn(...), and at top level, this evaluates to the object representing the browser window, which has keys for all global variables, including the library built-ins (most of which can be reassigned). Under strict mode, this is instead undefined inside a function invoked as fcn(...).

In a function invoked by the syntax obj.fcn(...), that is, where the function expression was returned as a value within an object, this evaluates to the object within the scope of the function. Thus, one can use it to create method-like behavior with the idiom:

var person = {height: 1.5, name: "donna"};

person.grow = function(amount) { this.height += amount; }

person.grow(0.1);

In this example, the "grow" key in the "person" object maps to a function that increments the value bound to the "height" key in the "person" object. One could create multiple objects that share the same function value.
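Sharing one function value between several objects might look like the following sketch. The objects donna, sam, and robot are example data, and call is the standard Function.prototype.call method.

```javascript
// One function value; this is bound at each call, not at definition
function grow(amount) { this.height += amount; }

var donna = {height: 1.5, name: "donna", grow: grow};
var sam   = {height: 1.0, name: "sam",   grow: grow};

donna.grow(0.5);   // this is donna
sam.grow(0.25);    // this is sam

// call() supplies the value of this explicitly, so the function can
// even operate on an object that has no grow key
var robot = {height: 3};
grow.call(robot, 1);
```

After these calls, donna.height is 2, sam.height is 1.25, and robot.height is 4.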

JavaScript also binds this during the somewhat bizarre syntax and idiom:

function Farm(c) {

this.corn = 7;

this.wheat = 0;

this.cows = c;

}

var f = new Farm(3);

When the new keyword precedes the function expression in function invocation, this is bound to a new object and the value of the expression is that object, not the return value of the function. This is typically employed as a combination class declaration and constructor. Note that the Farm function could bind other functions to keys of this, creating an object that acted much like a Java or C++ class with respect to the object.method(...) syntax.

Furthermore, an object can be chained to another object, so that keys that are not mapped by the first object are resolved by the second. In fact, in the above example, the object bound to f is chained to an implicitly-created object Farm.prototype. Any keys bound on that prototype act as if they were bound on f, so it acts very much like the "class" of f. The prototypes themselves can be chained, creating something that feels a bit like the classical inheritance model (or...lexically scoped environments).
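The chaining can be observed directly. This sketch repeats the Farm constructor from above so that it is self-contained; the totalCrops method is a hypothetical addition for illustration.

```javascript
function Farm(c) {
    this.corn = 7;
    this.wheat = 0;
    this.cows = c;
}

// Bound once on the prototype, visible through every Farm object
Farm.prototype.totalCrops = function() {
    return this.corn + this.wheat;
};

var f = new Farm(3);
var g = new Farm(10);
g.wheat = 5;

var fTotal = f.totalCrops();                                  // 7
var gTotal = g.totalCrops();                                  // 12

// The key is resolved through the chain, not stored on f itself
var ownKey = f.hasOwnProperty("totalCrops");                  // false
var chained = (Object.getPrototypeOf(f) === Farm.prototype);  // true

// Binding the key on f shadows the prototype's value for f only
f.totalCrops = function() { return 0; };
var fShadowed = f.totalCrops();                               // 0
var gUnchanged = g.totalCrops();                              // 12
```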

I find that object-oriented programming in any language is a tool that frequently clarifies code at first by grouping related state and computation. As a program grows, the boundaries between clusters grow complex and ambiguous, and object-oriented programming often seems less attractive. The fundamental problem is that the most powerful computation mutates state from multiple objects and has no natural class to own it. I observe the same properties in this prototypal inheritance, but magnified. I use it, but sparingly, and almost always rely on first-class functions for dynamic dispatch instead of explicit objects. In C++ and Java one doesn't have that alternative, so OOP is often the right, if imperfect model in those languages.

If you prefer the object-oriented style of programming for JavaScript, I highly recommend Douglas Crockford's articles on the topic.

Idioms

The key to effective JavaScript programming is appropriate use of functions and objects, often through functional programming idioms. Here are two heavily-commented examples demonstrating some of these idioms.

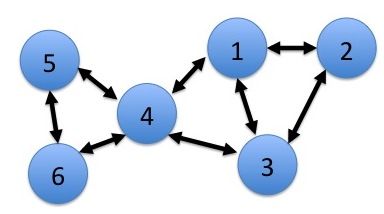

Graph

"use strict";

// The common (function() {...})() idiom below works around ambiguous

// syntax for immediately invoking an anonymous function,

// as previously described.

var makeNode = (function() {

// This state is private to makeNode because it is a local

// variable of the function within which the actual makeNode

// function was defined. This is one way to make "private"

// state.

var lastID = 0;

return function makeNode(v) {

++lastID;

// Each node has three properties, a unique ID, an array of

// neighbors, and a value. We choose to make the node's fields

// immutable.

return Object.freeze({id: lastID, neighbors: [], value: v});

};

})();

/* Can the target node be reached from this starting node? */

function reachable(node, target) {

// A third argument was secretly passed during recursion.

// This avoids the need to declare a helper procedure with

// different arguments. Note the use of the OR operator

// to return the first non-undefined value in this expression.

var visited = arguments[2] || {};

if (visited[node.id]) {

// Base case

return false;

} else {

// Note how we're using the object as a hash table

visited[node.id] = true;

// Think functionally instead of imperatively, which often

// means recursively. The target is reachable if we are at

// it, or if it can be reached from one of our neighbors. Use

// the built-in iterator (a reduction) to traverse the

// neighbors. Note that we use a first-class, anonymous function

// to specify what operation to apply within the iteration.

return (node.id == target.id) ||

node.neighbors.some(function(neighbor) {

// Pass the third argument to the recursive call.

return reachable(neighbor, target, visited);

});

}

}

Note the use of the Array.some function above. Although JavaScript provides some of the usual functional programming utility routines (e.g., map, and fold under the name reduce), you'll often either have to write others yourself or use a library such as Underscore.js to bring them in. Underscore is very nicely designed, but I can never remember the conventions of all of the variants, so I simply use the big hammer of codeheart.js's forEach, which has just enough functionality to emulate most forms of iteration.
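For reference, here is a brief sketch of the built-in array routines mentioned above, followed by one way a missing routine could be written by hand. The heights data and the fold helper are illustrative and are not part of codeheart.js or Underscore.js.

```javascript
var heights = [1.5, 2, 0.5, 3];

// Built-in higher-order functions on arrays
var doubled = heights.map(function(h) { return h * 2; });
var tall = heights.filter(function(h) { return h >= 2; });
var total = heights.reduce(function(sum, h) { return sum + h; }, 0);
var anyTall = heights.some(function(h) { return h > 2.5; });
var allPositive = heights.every(function(h) { return h > 0; });

// A hand-written left fold that also accepts array-like collections
// (such as arguments), which lack the built-in array methods
function fold(collection, fcn, initial) {
    var accumulator = initial, i;
    for (i = 0; i < collection.length; ++i) {
        accumulator = fcn(accumulator, collection[i]);
    }
    return accumulator;
}

function largest() {
    return fold(arguments, function(a, b) { return Math.max(a, b); }, -Infinity);
}

var biggest = largest(4, 9, 2);
```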

My A* (shortest path) example demonstrates one way to define the graph itself using first-class functions, in order to generalize a graph algorithm without either fixing the underlying representation or forcing an OOP style on the program.

Module

There are several ways of using functions to group state and implement module-like functionality. The simplest is to have modules be objects that contain functions (and constants, and variables...).

"use strict";

var module = (function() {

// This cannot be seen outside of the module, so it will not pollute the global namespace

function aPrivateFunction(z) { return Math.sqrt(z); }

// Freezing and sealing are two ways to prevent accidental mutation of the

// module after creation.

return Object.freeze({

// These are accessible as module.anExportedFunction after the definition

anExportedFunction: function(x, y) { return x + aPrivateFunction(y); },

anotherExportedFunction: function(x) { return 2 * x; }

});

})();
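The same pattern can also hide mutable state, not just helper functions. The following is a minimal sketch under that assumption; the counter module and all of its member names are hypothetical.

```javascript
var counter = (function() {
    // Private state and a private helper, both invisible to callers
    var count = 0;
    function clampToZero(n) { return Math.max(0, n); }

    // Only the functions in this frozen object are exported
    return Object.freeze({
        increment: function() { count = count + 1; return count; },
        decrement: function() { count = clampToZero(count - 1); return count; },
        value: function() { return count; }
    });
})();

counter.increment();
counter.increment();
counter.decrement();
counter.decrement();
counter.decrement();   // Clamped at zero by the private helper
counter.increment();
```

After these calls counter.value() is 1, while counter.count is undefined because count lives only in the closure. Since the exported object is frozen, Object.isFrozen(counter) is true and its functions cannot be replaced.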

Another variation mutates the global namespace object:

"use strict";

// Use function to create a local scope and persistent environment

(function() {

function aPrivateFunction(z) { return Math.sqrt(z); }

// Mutate the global environment

this.anExportedFunction = function(x, y) { return x + aPrivateFunction(y); };

this.anotherExportedFunction = function(x) { return 2 * x; };

}).call(this); // Pass the top-level this explicitly; it is undefined inside a plain call in strict mode

Performance

No-one chooses JavaScript for its performance. The language is inherently slow relative to C because it is dynamically typed, can be interrupted for memory management at any time, performs a hash table fetch for nearly every variable access, and forces the use of 64-bit floating point. However, the latest JIT compilers produce remarkably fast code given those constraints if one structures a program in a way that is amenable to certain optimizations. Numerically-dense code and string manipulation can perform comparably to Java, and when using WebGL, graphics programs may run nearly as fast as those written in C.

As is the case in most high-level languages, the primary performance consideration is memory allocation. Avoid allocating memory or allowing it to be collected within loops. This may mean mutating values instead of returning new ones, or ping-ponging between two buffers. My simple fast ray tracer is an example of minimizing heap allocation in the main loop. In that case, the program ran 25x faster than the nearly-equivalent slower version that allocated memory for each 3D vector operation instead of mutating a previous vector in place. (The fast version also replaces some generic codeheart.js utility methods with specialized ones.)
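The difference between the allocating and mutating styles can be sketched with a 2D vector sum. This is not code from the ray tracer; the function names are illustrative, and no timing is claimed for this particular example.

```javascript
// Allocating style: creates a new object on every call
function add(a, b) {
    return {x: a.x + b.x, y: a.y + b.y};
}

// Mutating style: writes the result into an existing object
function addInto(result, a, b) {
    result.x = a.x + b.x;
    result.y = a.y + b.y;
    return result;
}

function sumAllocating(points) {
    var total = {x: 0, y: 0}, i;
    for (i = 0; i < points.length; ++i) {
        total = add(total, points[i]);      // One allocation per iteration
    }
    return total;
}

function sumInPlace(points) {
    var total = {x: 0, y: 0}, i;
    for (i = 0; i < points.length; ++i) {
        addInto(total, total, points[i]);   // No allocation inside the loop
    }
    return total;
}

var points = [{x: 1, y: 2}, {x: 3, y: 4}, {x: 5, y: 6}];
var slowSum = sumAllocating(points);
var fastSum = sumInPlace(points);
```

Both versions compute {x: 9, y: 12}; the second simply gives the garbage collector nothing to reclaim per iteration.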

Wherever possible, rely on standard library functions that are backed by native code instead of re-implementing them in JavaScript. For example, the string, regular expression, and array manipulation functions may be an order of magnitude faster than what can be implemented within the language.

It is common practice for developers of large JavaScript libraries to "minify" their source code before distributing it. This process renames most variables to a single letter, eliminates whitespace and comments, and makes other reductions to the size of the source code. In some cases, this is effective obfuscation of the source code. I do not recommend this practice as a performance optimization for small scripts. It is unlikely to have a significant impact on execution time because parsing is rarely a performance bottleneck and most web servers today send content across the network using gzip compression. Furthermore, renaming variables can break JavaScript programs that take advantage of the fact that there are two syntaxes for key lookup within objects, or ones that share variables with other scripts.

For canvas (vs. WebGL) graphics, drawing images is very fast compared to drawing other primitives such as lines and circles. Consider pre-rendering images of circles and then drawing the image, rather than forcing software circle rasterization at run time. When WebGL is practical to employ, use that instead of software canvas graphics for a tremendous performance boost.

Arithmetic on numbers entered as integer literals is performed at integer precision in many implementations, which is substantially faster than full 64-bit floating point precision specified by the language. Very recent (as of 2014) JavaScript implementations also support Math.fround, which notifies the compiler that floating-point arithmetic can be performed at 32-bit precision.
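What Math.fround computes can be seen in a short sketch: it returns the nearest value that is representable as a 32-bit float. Whether a given engine then uses 32-bit arithmetic is an implementation detail that this example does not measure. The variable names are illustrative.

```javascript
// 0.5 is exactly representable in 32 bits, so it is unchanged
var exact = Math.fround(0.5);

// 0.1 is not, so the result differs slightly from the 64-bit value
var rounded = Math.fround(0.1);
var roundingError = Math.abs(rounded - 0.1);

// Wrapping each operand and the result keeps a whole computation
// within 32-bit precision
var single = Math.fround(Math.fround(0.1) + Math.fround(0.2));
var alreadySingle = (Math.fround(single) === single);
```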

The typed arrays (e.g., Float32Array) provide significantly better cache coherence and lower bandwidth than general arrays. My Show me Colors sample program performs the main image processing operations on typed arrays and is able to run in real time on a live video feed because of this optimization. If operating on large arrays of numbers, use them. If operating on an array of structures that use numeric fields, invert the structure of the program:

// Before:

var objArray = [{x: 1, y: 1, z: 2}, ..., {x: 4, y: 0, z: 3}];

// After:

var x = new Float32Array([1, ..., 4]);

var y = new Float32Array([1, ..., 0]);

var z = new Float32Array([1, ..., 3]);
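A runnable version of that inversion, with made-up sample data, might look like the sketch below. The helper names toColumns and sumColumn are hypothetical.

```javascript
var objArray = [{x: 1, y: 1, z: 2}, {x: 2, y: 5, z: 0}, {x: 4, y: 0, z: 3}];

// Convert an array of {x, y, z} records into one typed array per field
function toColumns(records) {
    var n = records.length, i;
    var columns = {
        x: new Float32Array(n),
        y: new Float32Array(n),
        z: new Float32Array(n)
    };
    for (i = 0; i < n; ++i) {
        columns.x[i] = records[i].x;
        columns.y[i] = records[i].y;
        columns.z[i] = records[i].z;
    }
    return columns;
}

// Processing then walks one dense numeric array at a time
function sumColumn(column) {
    var total = 0, i;
    for (i = 0; i < column.length; ++i) { total += column[i]; }
    return total;
}

var columns = toColumns(objArray);
var sumX = sumColumn(columns.x);   // 7
var sumY = sumColumn(columns.y);   // 6
var sumZ = sumColumn(columns.z);   // 5
```

Note that the Float32Array constructor takes either a length or a single array-like argument, not a list of individual values.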

JavaScript provides limited multithreading support through the web workers API. This is ideal for applications that perform significant independent computations where the end result is small, for example, computing the average value of a large array that is downloaded from a server. The input and output must be small to realize this advantage because the web workers API marshals all data through strings for inter-thread communication.

Finally, clear and simple code is often fast code. JavaScript code isn't going to be terrifically fast anyway, so don't exchange code clarity for a modest performance gain. Only complicate code when the performance gain is disproportional to the added complexity and in a truly critical location.

I thank John Hughes and the commenters below for their edits.

Morgan McGuire (@morgan3d) is a professor of Computer Science at Williams College, visiting professor at NVIDIA Research, and a professional game developer. His most recent games are Rocket Golfing and work on the Skylanders series. He is the author of the Graphics Codex, an essential reference for computer graphics now available in iOS and Web Editions.

Morgan McGuire (@morgan3d) is a professor of Computer Science at Williams College, visiting professor at NVIDIA Research, and a professional game developer. His most recent games are Rocket Golfing and work on the Skylanders series. He is the author of the Graphics Codex, an essential reference for computer graphics now available in iOS and Web Editions.

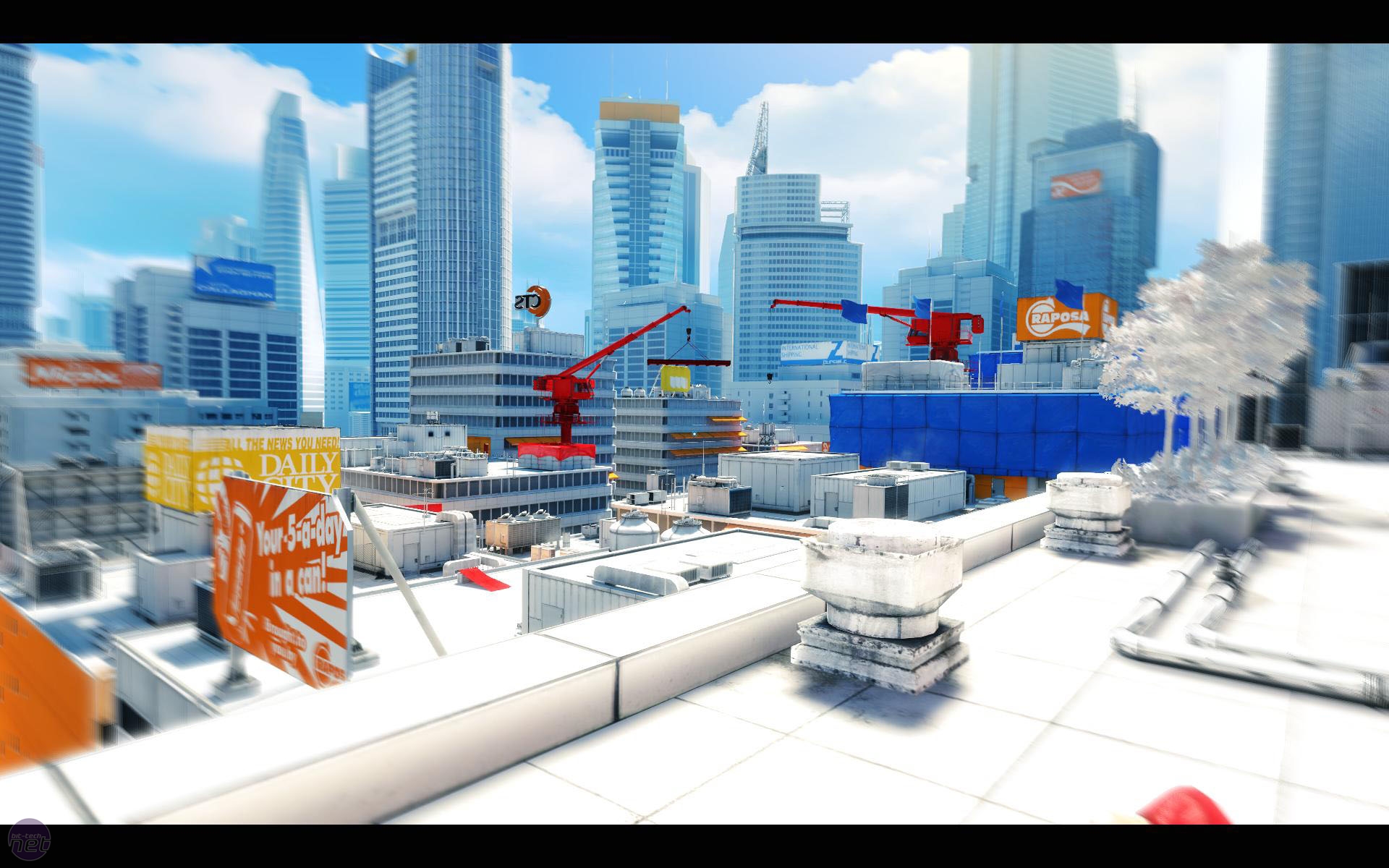

The method that we describe is a simple modification to a previous post-processing technique by David Gilham from ShaderX5. Our technique is simple to implement and fast across a range of hardware, from modern discrete GPUs to mobile and integrated GPUs to Xbox360 generation consoles. As you can see from the screenshot above, the depth of field effect is key to both framing the action and presenting the soft, cinematic look of this game. Scaling across different hardware while providing this feel for the visuals was essential for the game. "Simple to integrate" means that it requires only a regular pinhole image and depth buffer as input, and that it executes in three 2D passes, two of which are at substantially reduced resolution. The primary improvement over Gilham's original is better handling of occlusion, especially where an in focus object is seen behind an out of focus one.

The method that we describe is a simple modification to a previous post-processing technique by David Gilham from ShaderX5. Our technique is simple to implement and fast across a range of hardware, from modern discrete GPUs to mobile and integrated GPUs to Xbox360 generation consoles. As you can see from the screenshot above, the depth of field effect is key to both framing the action and presenting the soft, cinematic look of this game. Scaling across different hardware while providing this feel for the visuals was essential for the game. "Simple to integrate" means that it requires only a regular pinhole image and depth buffer as input, and that it executes in three 2D passes, two of which are at substantially reduced resolution. The primary improvement over Gilham's original is better handling of occlusion, especially where an in focus object is seen behind an out of focus one.

Morgan McGuire is a professor of Computer Science at Williams College, visiting professor at NVIDIA Research, and a professional game developer. He is the author of the Graphics Codex, an essential reference for computer graphics now available in iOS and Web Editions.

Morgan McGuire is a professor of Computer Science at Williams College, visiting professor at NVIDIA Research, and a professional game developer. He is the author of the Graphics Codex, an essential reference for computer graphics now available in iOS and Web Editions.

.png)